check_hadoop-dfs.sh

Files:

| File | Description |
|---|---|
| check_hadoop-dfs.sh | The script (Version 1.0) |
| get-dfsreport.sh | Code snippet that needs to be sudo'd |
| LICENSE | The appropriate license |

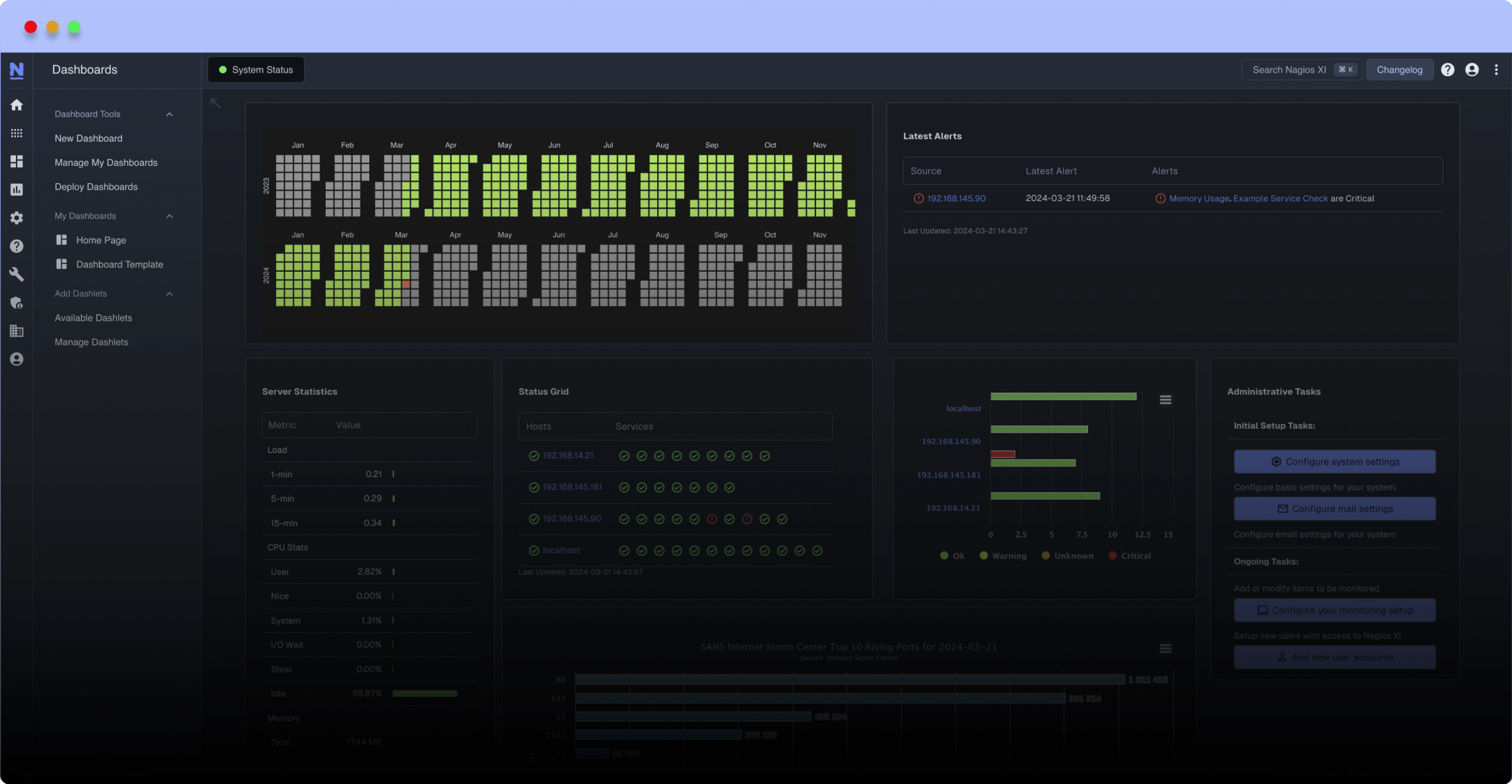

Meet The New Nagios Core Services Platform

Built on over 25 years of monitoring experience, the Nagios Core Services Platform provides insightful monitoring dashboards, time-saving monitoring wizards, and unmatched ease of use. Use it for free indefinitely.

Monitoring Made Magically Better

- Nagios Core on Overdrive

- Powerful Monitoring Dashboards

- Time-Saving Configuration Wizards

- Open Source Powered Monitoring On Steroids

- And So Much More!

Description

This is very much a work in progress: I'm currently busy bringing up a large Hadoop cluster, and the checks are being written along the way. Note that a small shell snippet has to be run via sudo by the Nagios user to obtain the actual DFS statistics, because I didn't want to grant Hadoop-related permissions to the Nagios user.

Put the small snippet (get-dfsreport.sh, listed under Files) in a directory of your choice (configurable via -s/--path-sh), keep the name get-dfsreport.sh, and make it readable, writable, and executable by root only. Then allow the Nagios user to run the script via /etc/sudoers (preferably edited with visudo).
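The setup described above could look roughly like the following. This is only a sketch under assumptions that are not part of the original listing: the monitoring user is called `nagios`, the helper sits in the default directory `/usr/local/sbin`, your sudo configuration includes `/etc/sudoers.d`, and the drop-in file name `nagios-hadoop` is purely hypothetical. The `sudoers_rule` function is an illustrative helper, not something shipped with the plugin.

```shell
#!/bin/sh
# Sketch only: user name, directory, and sudoers file name are assumptions.

# sudoers_rule USER DIR
# Print a sudoers rule that lets USER run only DIR/get-dfsreport.sh,
# as root and without a password prompt.
sudoers_rule() {
    printf '%s ALL=(root) NOPASSWD: %s/get-dfsreport.sh\n' "$1" "$2"
}

# Steps to run as root (left as comments so the sketch changes nothing):
#   install -o root -g root -m 0700 get-dfsreport.sh /usr/local/sbin/get-dfsreport.sh
#   sudoers_rule nagios /usr/local/sbin > /etc/sudoers.d/nagios-hadoop
#   chmod 0440 /etc/sudoers.d/nagios-hadoop
#   visudo -cf /etc/sudoers.d/nagios-hadoop   # syntax check before relying on it

# Show the rule that would be written:
sudoers_rule nagios /usr/local/sbin
```

Restricting the rule to the one script keeps the Nagios user away from any other root or Hadoop privileges, which matches the intent stated in the description.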

Version

Version 1.0, 2009, Mike Adolphs (http://www.matejunkie.com/)

-h/--help Output

check_hadoop-dfs.sh is a Nagios plugin to check the status of HDFS, Hadoop's underlying, redundant, distributed file system.

check_hadoop-dfs.sh -s /usr/local/sbin -w 10 -c 5

Options:

-s|--path-sh)

Path to the directory containing the shell script mentioned in the description (get-dfsreport.sh). Default is: /usr/local/sbin

-w|--warning)

Defines the warning level for available datanodes. Default is: off

-c|--critical)

Defines the critical level for available datanodes. Default is: off

Output Example

user@host: ~ $ ./check_hadoop-dfs.sh -s /var/nagios/home/bin

OK - Datanodes up and running: 50, DFS total: 20147365 MB, DFS used: 0 MB (0%) | 'datanodes_available'=50 'dfs_total'=20147365 'dfs_used'=0

user@host: ~ $ ./check_hadoop-dfs.sh -s /var/nagios/home/bin -w 40 -c 30

OK - Datanodes up and running: 50, DFS total: 20147365 MB, DFS used: 0 MB (0%) | 'datanodes_available'=50 'dfs_total'=20147365 'dfs_used'=0

user@host: ~ $ ./check_hadoop-dfs.sh -s /var/nagios/home/bin -w 60 -c 40

WARNING - Datanodes up and running: 50, DFS total: 20147365 MB, DFS used: 0 MB (0%) | 'datanodes_available'=50 'dfs_total'=20147365 'dfs_used'=0

user@host: ~ $ ./check_hadoop-dfs.sh -s /var/nagios/home/bin -w 70 -c 60

CRITICAL - Datanodes up and running: 50, DFS total: 20147365 MB, DFS used: 0 MB (0%) | 'datanodes_available'=50 'dfs_total'=20147365 'dfs_used'=0

user@host: ~ $ ./check_hadoop-dfs.sh -s /var/nagios/home/bin -w 20 -c 40

Please adjust your warning/critical thresholds. The warning must be higher than the critical level!

user@host: ~ $ ./check_hadoop-dfs.sh -s /var/nagios/home/bin -w 30

Please also set a critical value when you want to use warning/critical thresholds!

user@host: ~ $ ./check_hadoop-dfs.sh -s /var/nagios/home/bin -c 30

Please also set a warning value when you want to use warning/critical thresholds!
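The threshold behaviour visible in the examples above can be sketched as a small shell function. This is not the plugin's actual source, just a reconstruction from the sample output. Assumptions: the comparison is a strict "less than" (the examples do not show what happens when the datanode count equals a threshold), equal warning and critical values are rejected, and invalid threshold combinations exit with 3 (UNKNOWN in the standard Nagios plugin convention). The function name `evaluate_datanodes` is hypothetical.

```shell
#!/bin/sh
# Sketch of the threshold logic implied by the examples; not the real plugin.
# Nagios plugin exit codes: 0 = OK, 1 = WARNING, 2 = CRITICAL, 3 = UNKNOWN.

# evaluate_datanodes AVAILABLE [WARNING] [CRITICAL]
# Omit WARNING and CRITICAL (or pass empty strings) to disable thresholds.
evaluate_datanodes() {
    avail=$1
    warn=${2:-}
    crit=${3:-}

    # Thresholds are only accepted as a pair.
    if [ -n "$warn" ] && [ -z "$crit" ]; then
        echo "Please also set a critical value when you want to use warning/critical thresholds!"
        return 3   # assumption: UNKNOWN on invalid usage
    fi
    if [ -z "$warn" ] && [ -n "$crit" ]; then
        echo "Please also set a warning value when you want to use warning/critical thresholds!"
        return 3
    fi

    if [ -n "$warn" ]; then
        # Fewer datanodes is worse, so warning must sit above critical.
        if [ "$warn" -le "$crit" ]; then
            echo "Please adjust your warning/critical thresholds. The warning must be higher than the critical level!"
            return 3
        fi
        # Assumption: strict comparison; the boundary case is not documented.
        if [ "$avail" -lt "$crit" ]; then
            echo "CRITICAL - Datanodes up and running: $avail"
            return 2
        fi
        if [ "$avail" -lt "$warn" ]; then
            echo "WARNING - Datanodes up and running: $avail"
            return 1
        fi
    fi

    echo "OK - Datanodes up and running: $avail"
    return 0
}

# 50 datanodes with -w 40 -c 30 stays OK, as in the second example.
evaluate_datanodes 50 40 30
```

Because the check counts available datanodes, the thresholds run "downwards": the state gets worse as the number drops, which is why the warning level has to be the larger of the two values.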
