check_hadoop_hdfs_webhdfs.pl (Advanced Nagios Plugins Collection)

Compatible With

- Nagios 1.x

- Nagios 2.x

- Nagios 3.x

- Nagios 4.x

- Nagios XI

Hits

24621

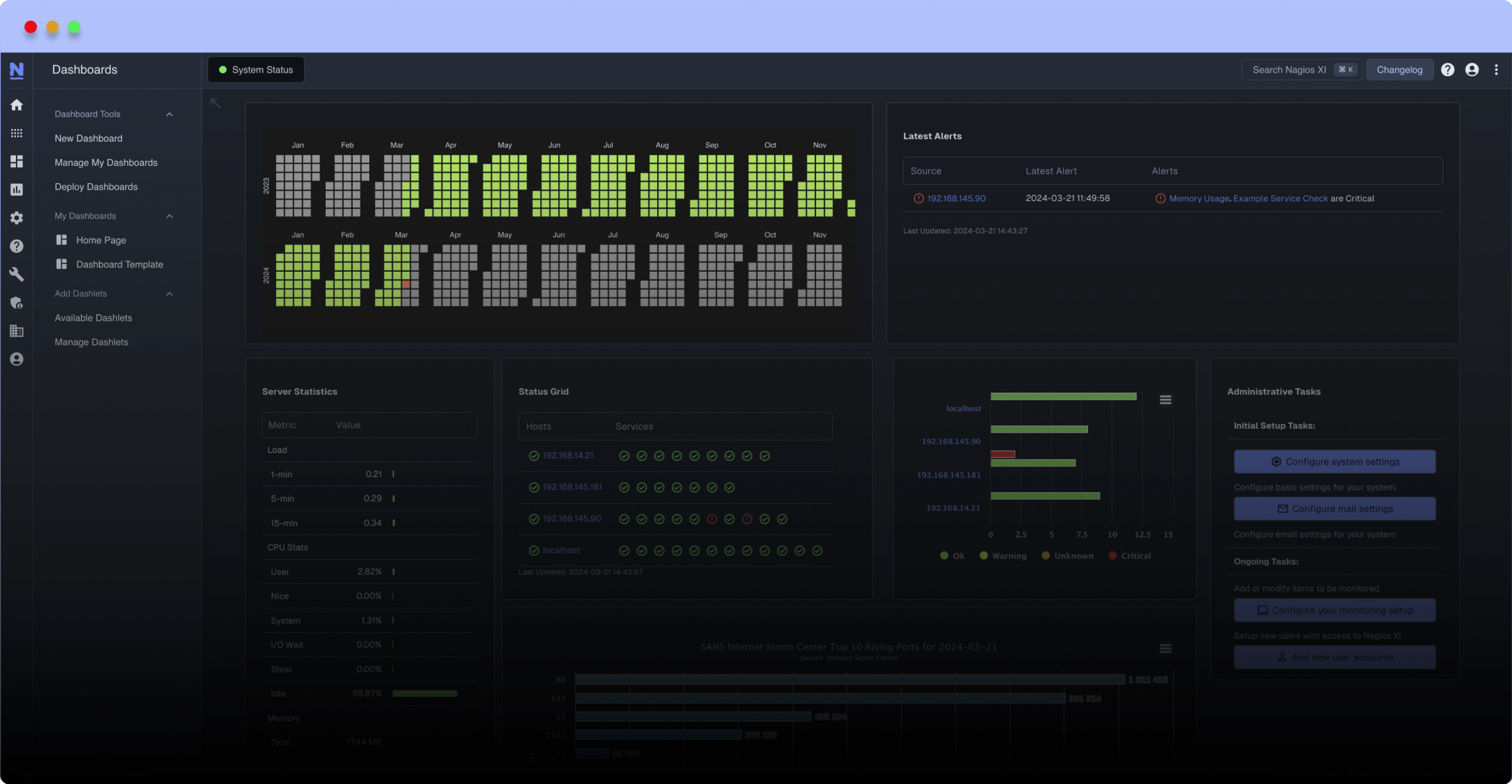

Meet The New Nagios Core Services Platform

Built on over 25 years of monitoring experience, the Nagios Core Services Platform provides insightful monitoring dashboards, time-saving monitoring wizards, and unmatched ease of use. Use it for free indefinitely.

Monitoring Made Magically Better

- Nagios Core on Overdrive

- Powerful Monitoring Dashboards

- Time-Saving Configuration Wizards

- Open Source Powered Monitoring On Steroids

- And So Much More!

Part of the Advanced Nagios Plugins Collection.

Download it here:

https://github.com/harisekhon/nagios-plugins

./check_hadoop_hdfs_webhdfs.pl --help

Nagios Plugin to check HDFS files/directories, or that HDFS is writable, via the WebHDFS API or an HttpFS server
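WebHDFS, and HttpFS, which serves the same REST API, is plain HTTP: each operation is a request to `/webhdfs/v1/<path>?op=<OPERATION>`. The Python sketch below is not the plugin's code (the plugin is written in Perl). It shows how the `-H`/`-P` environment-variable fallbacks from the usage text could be resolved, and how the two operations a check like this would most likely use could be turned into URLs: `GETFILESTATUS` for the file checks and `CREATE` for the canary write. The canary naming scheme and the `check_hdfs_canary` prefix are hypothetical.

```python
import os
import time
import uuid
from urllib.parse import quote, urlencode

DEFAULT_PORT = 50070  # default NameNode HTTP port from the -P/--port help text


def resolve_host_port(host=None, port=None, env=None):
    """Apply the environment-variable fallbacks listed for -H/--host and -P/--port."""
    env = os.environ if env is None else env
    if host is None:
        host = next((env[v] for v in ("HADOOP_NAMENODE_HOST", "HADOOP_HOST", "HOST")
                     if env.get(v)), None)
    if host is None:
        raise ValueError("no NameNode host given")
    if port is None:
        port = next((env[v] for v in ("HADOOP_NAMENODE_PORT", "HADOOP_PORT", "PORT")
                     if env.get(v)), DEFAULT_PORT)
    return host, int(port)


def webhdfs_url(host, port, path, op, **params):
    """Build http://<host>:<port>/webhdfs/v1<path>?op=<OP>[&param=value...]."""
    if not path.startswith("/"):
        raise ValueError("HDFS path must be absolute")
    query = urlencode({"op": op, **params})
    return f"http://{host}:{port}/webhdfs/v1{quote(path)}?{query}"


def canary_path(prefix="check_hdfs_canary"):
    """Hypothetical unique canary file name under /tmp (timestamp + random hex)."""
    return f"/tmp/{prefix}.{int(time.time())}.{uuid.uuid4().hex}"


if __name__ == "__main__":
    host, port = resolve_host_port(env={"HADOOP_HOST": "nn1"})
    print(webhdfs_url(host, port, "/data/in", "GETFILESTATUS"))
    # http://nn1:50070/webhdfs/v1/data/in?op=GETFILESTATUS
    print(webhdfs_url(host, port, canary_path(), "CREATE", overwrite="false"))
```

Note that `CREATE` is a two-step exchange in WebHDFS: the NameNode answers the first `PUT` with a `307 Temporary Redirect` to a DataNode, and the file data is then sent to that location. A real canary write therefore has to follow the redirect rather than stop at the NameNode's reply.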

Checks:

- File/directory existence and one or more of the following:

- type: file or directory

- owner/group

- permissions

- size / empty

- block size

- replication factor

- last accessed time

- last modified time

OR

- HDFS writable - writes a small canary file to hdfs:///tmp to check that HDFS is fully available and not in Safe mode (leaving Safe mode means that enough DataNodes have checked in since startup)
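When a WebHDFS request fails, the server-side Java exception is returned as a JSON `RemoteException` object with `exception`, `javaClassName` and `message` fields. Below is a minimal sketch, assuming that error format and the standard Nagios exit codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN), of how the result of the canary write could be mapped to a plugin status. It reports `SafeModeException` separately from other write failures. Matching the text "safe mode" in the message is a heuristic of this sketch, not documented plugin behaviour.

```python
import json

# Standard Nagios plugin exit codes
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def classify_write_response(status, body):
    """Map the final HTTP status and body of the canary CREATE to (exit_code, message)."""
    if status == 201:  # WebHDFS replies 201 Created once the file has been written
        return OK, "HDFS writable"
    try:
        remote = json.loads(body).get("RemoteException", {})
    except (ValueError, AttributeError):  # empty or non-JSON body
        remote = {}
    if not isinstance(remote, dict):
        remote = {}
    message = remote.get("message") or body.strip() or f"HTTP {status}"
    # Heuristic: the exception name, or "safe mode" in the NameNode's message
    if remote.get("exception") == "SafeModeException" or "safe mode" in message.lower():
        return CRITICAL, f"HDFS in Safe mode: {message}"
    return CRITICAL, f"HDFS write failed (HTTP {status}): {message}"
```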

Tested on CDH 4.5

usage: check_hadoop_hdfs_webhdfs.pl [ options ]

-H --host Hadoop NameNode host ($HADOOP_NAMENODE_HOST, $HADOOP_HOST, $HOST)

-P --port Hadoop NameNode port ($HADOOP_NAMENODE_PORT, $HADOOP_PORT, $PORT, default: 50070)

-w --write Write unique canary file to hdfs:///tmp to check HDFS is writable and not in Safe mode

-p --path HDFS file or directory to check for existence

-T --type 'FILE' or 'DIRECTORY' (default: 'FILE')

-o --owner Owner name

-g --group Group name

-e --permission Permission octal mode

-Z --zero Additional check that file is empty

-S --size Minimum size of file

-R --replication Replication factor

-a --last-accessed Last-accessed time maximum in seconds

-m --last-modified Last-modified time maximum in seconds

-B --blockSize Blocksize to expect

-t --timeout Timeout in secs (default: 10)

-v --verbose Verbose mode (-v, -vv, -vvv ...)

-h --help Print description and usage options

-V --version Print version and exit
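The per-file options correspond closely to the `FileStatus` object returned by a WebHDFS `GETFILESTATUS` call. Its fields include `type`, `owner`, `group`, `permission` (an octal string such as `"644"`), `length`, `replication`, `blockSize`, and `accessTime`/`modificationTime` in milliseconds since the epoch. The sketch below compares such a dict with thresholds named after the options above. It assumes every mismatch is CRITICAL and that `-a`/`-m` mean a maximum age in seconds; the plugin's own severities and messages may differ.

```python
import time

# Standard Nagios plugin exit codes used by this sketch
OK, CRITICAL = 0, 2


def check_file_status(fs, expect_type="FILE", owner=None, group=None,
                      permission=None, zero=False, min_size=None,
                      replication=None, last_accessed=None,
                      last_modified=None, block_size=None, now=None):
    """Check a WebHDFS FileStatus dict; each keyword mirrors one CLI option."""
    now = time.time() if now is None else now
    problems = []
    if fs["type"] != expect_type.upper():                             # -T
        problems.append(f"type {fs['type']} != {expect_type.upper()}")
    if owner is not None and fs["owner"] != owner:                     # -o
        problems.append(f"owner {fs['owner']} != {owner}")
    if group is not None and fs["group"] != group:                     # -g
        problems.append(f"group {fs['group']} != {group}")
    # -e: compare as octal numbers so "644" and "0644" are equal
    if permission is not None and int(fs["permission"], 8) != int(str(permission), 8):
        problems.append(f"permission {fs['permission']} != {permission}")
    if zero and fs["length"] != 0:                                     # -Z
        problems.append(f"not empty ({fs['length']} bytes)")
    if min_size is not None and fs["length"] < min_size:               # -S
        problems.append(f"size {fs['length']} < {min_size}")
    if replication is not None and fs["replication"] != replication:   # -R
        problems.append(f"replication {fs['replication']} != {replication}")
    if block_size is not None and fs["blockSize"] != block_size:       # -B
        problems.append(f"block size {fs['blockSize']} != {block_size}")
    # -a / -m: WebHDFS timestamps are milliseconds since the epoch
    for limit, field, label in ((last_accessed, "accessTime", "accessed"),
                                (last_modified, "modificationTime", "modified")):
        if limit is not None:
            age = now - fs[field] / 1000
            if age > limit:
                problems.append(f"last {label} {age:.0f}s ago > {limit}s")
    if problems:
        return CRITICAL, "; ".join(problems)
    return OK, "all checks passed"
```

Collecting every failed check before returning reports all problems in one status line, rather than stopping at the first mismatch.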
