RAID - Hardware [LSI/3ware/Areca/Adaptec] / Software arrays and ZFS monitoring

Current Version: 0.3

Last Release Date: 2019-10-15

Compatible With:

- Nagios 3.x

- Nagios 4.x

- Nagios XI

License: GPL

Hits: 5573

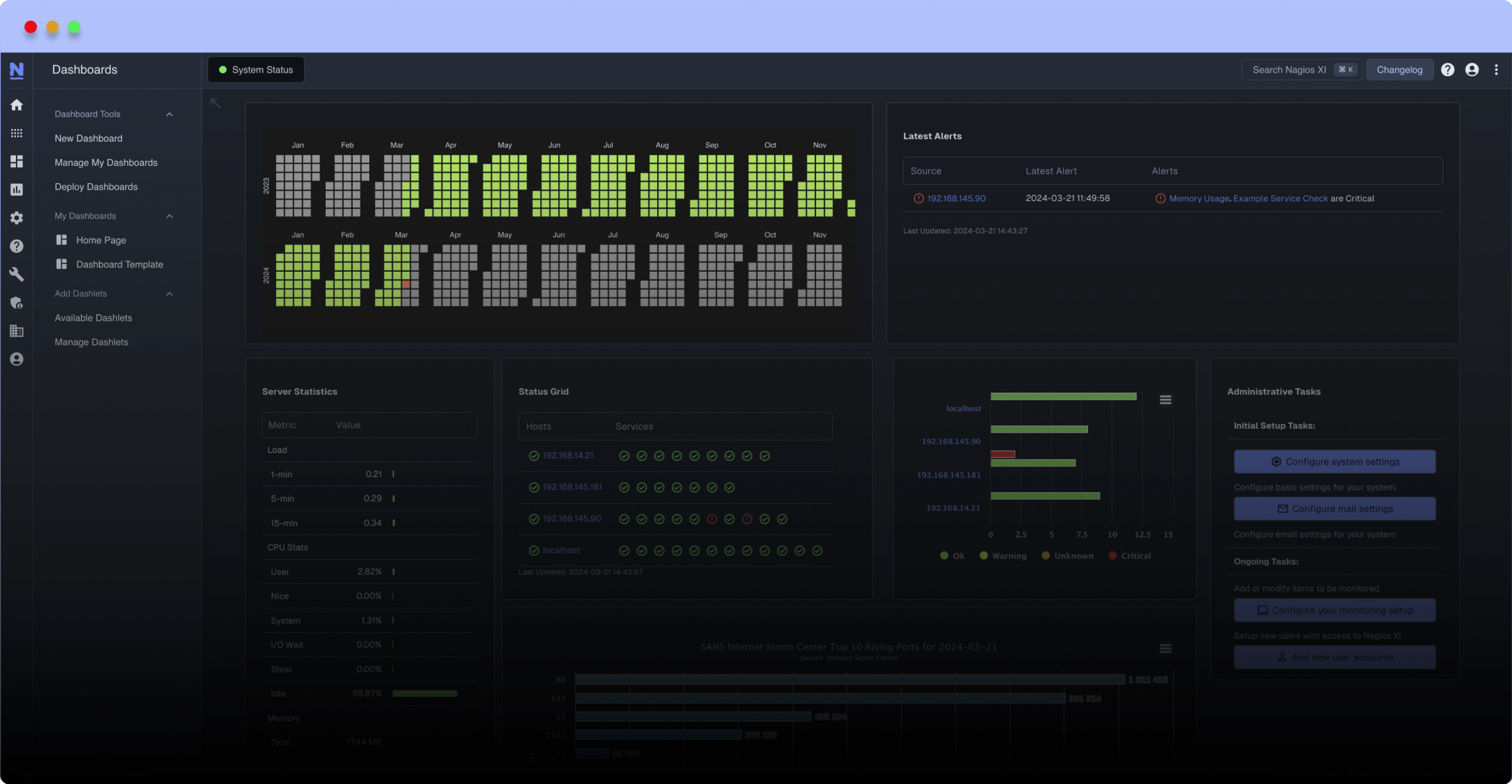

Meet The New Nagios Core Services Platform

Built on over 25 years of monitoring experience, the Nagios Core Services Platform provides insightful monitoring dashboards, time-saving monitoring wizards, and unmatched ease of use. Use it for free indefinitely.

Monitoring Made Magically Better

- Nagios Core on Overdrive

- Powerful Monitoring Dashboards

- Time-Saving Configuration Wizards

- Open Source Powered Monitoring On Steroids

- And So Much More!

This plugin monitors the health of, and detects missing components in:

- Hardware RAID (supported RAID controllers: LSI, 3ware, Areca, Adaptec): the controller itself, virtual drives, drive enclosures, and the physical drives in the RAID setup (via the S.M.A.R.T. reallocated sectors value, attribute 0x05)

- Software RAID via the mdadm tool (arrays and drives)

- ZFS pools

It also monitors all drives detected (via smartctl) in non-RAID / non-ZFS (or mixed) environments.
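To make the reallocated sectors check concrete, here is a minimal sketch of reading attribute 5 (0x05) from text in the layout that `smartctl -A` prints. It is not the plugin's own code (the plugin is a shell script), and the sample text, the function name `reallocated_sectors`, and the choice to return `None` when the attribute is missing are all assumptions made for illustration.

```python
import re

# Illustrative excerpt in the style of `smartctl -A` output.
# The values are made up for this example, not captured from a real drive.
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       716
  9 Power_On_Hours          0x0032   095   095   000    Old_age   Always       -       4321
"""


def reallocated_sectors(smart_output):
    """Return the raw value of attribute 5 (0x05), or None if it is absent."""
    for line in smart_output.splitlines():
        fields = line.split()
        # Attribute rows have at least 10 columns; the raw value is the 10th.
        if len(fields) >= 10 and fields[0] == "5":
            match = re.match(r"\d+", fields[9])
            return int(match.group()) if match else None
    return None


if __name__ == "__main__":
    print(reallocated_sectors(SAMPLE))
```

In real use the text would come from running smartctl against a device. A drive that does not report the attribute at all yields `None` here, so the caller has to decide how to report that case.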

For this plugin to work, you need to download the RAID controller utilities and place them in the /opt directory.

You can download the tools from the official website or from my GitHub: https://github.com/realasmo/bash/tree/master/nagios-plugins/check-storage/hwraid_utils

- - - - - - - - - - - - - - - - - - - - -

Example output:

Software RAID only (drive /dev/sdb in array md0 reports 716 reallocated sectors):

[root@strdev3 ~]# ./check-storage.sh

[STORAGE][SWR]::Array:md2:Health: OK (state/failed_dev/removed_dev: clean/0/0):Array:md1:Health: OK (state/failed_dev/removed_dev: clean/0/0):Array:md0:Health: OK (state/failed_dev/removed_dev: clean/0/0):[STORAGE]drv:/dev/sda:Health: OK (realloc: 0):drv:/dev/sdb:Health: CRITICAL (realloc: 716):drv:/dev/sdc:Health: OK (realloc: 1):drv:/dev/sdd:Health: OK (realloc: 0):

Software RAID only (array md127 reports 4 removed drives):

[root@strdev5 ~]# ./check-storage.sh

[STORAGE][SWR]::Array:md127:Health: CRITICAL (state/failed_dev/removed_dev: active/0/4):[STORAGE]drv:/dev/sda:Health: OK (realloc: 0):drv:/dev/sdb:Health: OK (realloc: 0):

Hardware RAID (drive p0 reports ECC-ERROR):

[root@strdev2 ~]# ./check-storage.sh

[STORAGE][3Ware]::CTL: c0: Health: OK (NotOpt:0)::Unit: u0: Health: OK (Status: VERIFYING, type/size: RAID-10/1862.62GB)::Drive: p0: Health: CRITICAL (Status/ReallocSect: ECC-ERROR/0, VPort/Size/Type: p0/931.51GBGB/SATA)::Drive: p1: Health: OK (Status/ReallocSect: OK/0, VPort/Size/Type: p1/931.51GBGB/SATA)::Drive: p2: Health: OK (Status/ReallocSect: OK/0, VPort/Size/Type: p2/931.51GBGB/SATA)::Drive: p3: Health: OK (Status/ReallocSect: OK/0, VPort/Size/Type: p3/931.51GBGB/SATA)::[STORAGE]drv:0:Health: OK (realloc: 0):drv:1:Health: OK (realloc: 0):drv:2:Health: OK (realloc: 0):drv:3:Health: OK (realloc: 0):

Checks on an unsupported RAID controller are limited to drives and software arrays, if present:

[root@strdev1 ~]# ./check-storage.sh

[HWR]:Found unsupported RAID card :: [STORAGE][SWR]::Array:md124:Health: OK (state/failed_dev/removed_dev: active/0/0):Array:md125:Health: CRITICAL (state/failed_dev/removed_dev: active/0/1):Array:md126:Health: CRITICAL (state/failed_dev/removed_dev: clean/0/1):Array:md127:Health: CRITICAL (state/failed_dev/removed_dev: clean/0/1):[STORAGE]drv:/dev/sdb:Health: OK (realloc: 0):drv:/dev/sdc:Health: OK (realloc: 0):drv:/dev/sdd:Health: OK (realloc: 0):drv:/dev/sde:Health: OK (realloc: 0):drv:/dev/sdf:Health: OK (realloc: 0):drv:/dev/sdg:Health: OK (realloc: 0):drv:/dev/sdh:Health: OK (realloc: 0):drv:/dev/sdi:Health: OK (realloc: 0):drv:/dev/sdj:Health: OK (realloc: 0):drv:/dev/sdk:Health: OK (realloc: 0):drv:/dev/sdl:Health: OK (realloc: 6):drv:/dev/sdm:Health: OK (realloc: 6):drv:/dev/sdn:Health: OK (realloc: 16):drv:/dev/sdo:Health: OK (realloc: 0):drv:/dev/sdp:Health: OK (realloc: 0):

Checks on ZFS pool:

[root@strdev4 ~]# ./check-storage.sh

[ZFS]::Health: CRITICAL (name/size/health: pool1/2.72T/DEGRADED):[STORAGE]drv:/dev/sda:Health: OK (realloc: S_NOATTR):drv:/dev/sdb:Health: OK (realloc: 0):drv:/dev/sdc:Health: OK (realloc: 0):drv:/dev/sdd:Health: OK (realloc: S_NOATTR):drv:/dev/sde:Health: OK (realloc: 0):drv:/dev/sdf:Health: OK (realloc: 0):drv:/dev/sdg:Health: OK (realloc: 0):
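The single-line output shown in these examples can be reduced to one overall state by collecting every `Health: <STATE>` token. The sketch below does that and maps the worst state to the standard Nagios plugin exit codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). It is a hypothetical wrapper, not part of the plugin. The examples above only show `OK` and `CRITICAL`, so the handling of `WARNING`, and of any other state word as `UNKNOWN`, is an assumption, as is the function name `summarize`. Whether check-storage.sh already sets these exit codes itself is not shown here.

```python
import re
from collections import Counter

# Standard Nagios plugin exit codes.
EXIT_CODES = {"OK": 0, "WARNING": 1, "CRITICAL": 2, "UNKNOWN": 3}
# Order in which states are checked, worst first (an assumption of this sketch).
SEVERITY = ["CRITICAL", "WARNING", "UNKNOWN", "OK"]

# Output line copied from the software RAID example above.
SAMPLE = (
    "[STORAGE][SWR]::Array:md127:Health: CRITICAL "
    "(state/failed_dev/removed_dev: active/0/4):"
    "[STORAGE]drv:/dev/sda:Health: OK (realloc: 0):"
    "drv:/dev/sdb:Health: OK (realloc: 0):"
)


def summarize(output):
    """Return (exit_code, per-state counts) for one line of plugin output."""
    found = re.findall(r"Health: ([A-Z]+)", output)
    # Any state word this sketch does not recognise is counted as UNKNOWN.
    states = [s if s in EXIT_CODES else "UNKNOWN" for s in found]
    counts = Counter(states)
    for state in SEVERITY:
        if counts[state]:
            return EXIT_CODES[state], counts
    # No Health tokens at all: report UNKNOWN rather than OK.
    return EXIT_CODES["UNKNOWN"], counts


if __name__ == "__main__":
    code, counts = summarize(SAMPLE)
    print(code, dict(counts))
```

Treating empty or unparsable output as UNKNOWN rather than OK is a deliberate choice: a check that silently produces nothing should not look healthy.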

Reviews (1)

This plug-in provides so much useful information and, to top it off, it works on many different RAID cards.

The output it provides helps me determine when it's time to replace a failing hard drive or when my software raid needs attention.

Highly recommended plug-in! Give this developer some feedback. Very well coded! Looking forward to more plugins from this developer.
